
The Radio Ratings Process Is Broken

March 16, 2021


The ratings system is broken. The ridiculousness of the radio ratings process continues and it appears broadcasters are doing little about it.

Most every market has stories about crazy, unbelievably wild ratings swings traced to one or two households. Or, in some cases, one or two meters. Yet management continues to freak out over monthly moves and programmers make adjustments to programming based on data we know is unreliable.

Consider this:

Over 70,000 people are packed into a stadium.

Now imagine that in one section of that stadium is a group of about 150 folks. They’re various ages, genders and races. Several primary languages are spoken. They live in different areas, work different jobs and belong to many different socio-economic classes.

Would it make sense to isolate these 150 people, ask a series of questions and draw a conclusion that would be assumed true? Now consider this: What if those answers were projected to represent the entire stadium?

What useful information would we learn about their eating habits or what type of car they prefer? Or what magazines they read, which social media platforms they use, and whether they’re iPhone or Android users? The data would be utterly useless. And nobody in their right mind would consider it a representative sample.

Yet that’s what the broadcast industry allows the ratings companies to get away with. Except it’s actually worse.

The Radio Ratings Process Is Ridiculous and Broken

The ratings company recruits a sample either by going door-to-door or by telephone. That should tell you enough about it already.

How many people do you know who would pick up the phone when an unknown number calls? Or answer the door for a stranger who wants something from them?

Oh, and it’s not as simple as answering a few questions. In a PPM market, respondents (and their families) must carry a pager-like device for a year (or more) to record their listening behavior. That’s like following the fans in the stadium for a year to track all the food they eat.

Seriously, who’s going to do that? Real people? Of course they won’t. Ratings respondents are unique.

Consultant, researcher and programming guru Dennis Constantine tells me:

“Any good researcher will tell you that you should never have two people in a study that know each other. That’s why we don’t just invite a group of friends or family members together to a perceptual study or music test. Yet, radio ratings invite the whole family – sometimes up to 8 members of a family. And then, they play games with the participants to get them to listen more. The more you listen, the more money you make. Results are that one diary or one family can make or break a radio station.”

Clearly, it is anything but a representative sample. But that is the radio ratings process.

Ratings Case Study: One Meter’s Impact

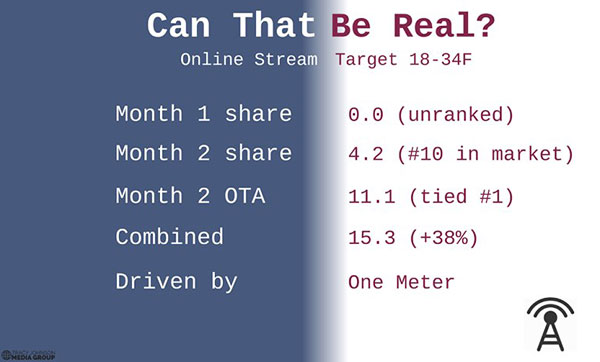

Maybe that’s why the placement of one meter caused a 38% increase for a station in a market’s ratings. This is a real-life ratings story.

It happened to one of my client stations (in our favor). If I hadn’t experienced it first-hand, I probably wouldn’t believe it. It all happened because of just one meter. One.

This may shock you, but then again, maybe not. It’s a lot more common than it should be. What do you expect? Respondents are asked to carry or wear a pager-like device straight out of the 90s.

It’s attracting a unique type of person.

Here are the facts in this case:

- It’s a major market, in the top 20 in the USA. The 32 rated radio stations in the metro don’t include signals coming in from nearby cities.

- In one month, the station’s online stream grew from nowhere…literally a 0.0…to a 4.2 share in our (broad) target demographic.

- This made the station’s online stream the 10th highest-ranked station in the market.

- The over-the-air signal for this station was tied for #1 in that target demo with an 11.1 share.

- If you add the ratings from the online stream (4.2) to the over-the-air share (11.1), the station has a 15.3 share. That’s an incredible gain of 38%.
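For anyone who wants to check the math, here is a quick sketch in Python. The function name is mine, and simply adding the stream share to the over-the-air share is an assumption that mirrors the calculation above, not a description of how any ratings company combines them.

```python
def combined_share_gain(over_the_air: float, stream: float) -> tuple[float, float]:
    """Return (combined share, percent gain relative to the over-the-air share)."""
    combined = over_the_air + stream           # assumes the two shares are additive
    gain_pct = stream / over_the_air * 100     # gain measured against over-the-air alone
    return combined, gain_pct


# Figures from the case above: 11.1 over the air, 4.2 from the online stream.
combined, gain_pct = combined_share_gain(11.1, 4.2)
print(f"Combined share: {combined:.1f}")
print(f"Relative gain: {gain_pct:.0f}%")
```

The gain is measured against the over-the-air share alone, which is where the 38% figure comes from.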

Now, here’s the kicker. If you’re not already sitting down, this would be a good time. We traced the online growth.

It Was One Meter

One meter would have driven the station’s ratings up by 38%. Four shares from one meter? This should never happen, no matter how narrow the demographic.

Ratings Case Study #2

Imagine that you must decide the future direction of your radio station. Let’s say you are considering a format change.

You commission a perceptual research project to measure the health of the brand, current opportunities and available positions in the market. The only thing that’s certain is that the station must win with 18-34 women.

The research company reports back with their findings based on the following sample:

W 18-24: 58 respondents

W 25-34: 89 respondents

Total W 18-34: 147 respondents

This doesn’t take into account ethnicity, primary language spoken at home or listening preferences. Just raw numbers.

Go ahead. Make the decision that will affect the station’s future. And perhaps your career.

If the target is 18-24-year-old women (typical for a CHR), 58 responses isn’t even enough for a valid sample to make weekly music decisions. That’s still true even if they were screened by music or station preference.

And this sample from Nielsen includes rock fans, country fans, jazz fans, Christian music listeners, etc.

Actual Market Data

Basing important decisions on information gathered from this sample should be grounds for termination. It’s irresponsible.

I don’t know of a single research company that would present this sample to a broadcaster.

Here’s the thing: That’s the actual in-tab sample from a Nielsen Ratings Report in one of the markets I work with. Not a weekly. A full report.

In a Top 20 market with a population over 2 million. 58 women age 18-24 carry meters that determine the ratings for all stations in the market.
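To put a rough number on how thin that is, here is a back-of-the-envelope sketch using the textbook margin-of-error formula for a proportion. It assumes a simple random sample, a 95% confidence level and the worst-case 50/50 split. Those are my assumptions for illustration, and the function name is made up. This is not how Nielsen calculates or reports reliability, and a panel recruited the way this one is would likely do worse than the formula suggests.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error, in percentage points, for a proportion.

    Assumes a simple random sample of size n; z=1.96 is the 95% level and
    p=0.5 is the worst case. Illustrative only, not a ratings-company method.
    """
    return z * math.sqrt(p * (1 - p) / n) * 100


# In-tab counts quoted in the sample above.
for label, n in [("W 18-24", 58), ("W 25-34", 89), ("W 18-34", 147)]:
    print(f"{label}: n={n}, roughly +/- {margin_of_error(n):.1f} points")
```

Under those generous assumptions, 58 respondents works out to roughly 13 points either way, and even the full 147 is only good to about 8.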

This research is the basis for millions of dollars in advertising. And broadcasters are paying how much for this data? Really? We collectively sell this to advertisers that actually have confidence that their marketing investment is in good hands?

I have a (fairly) strict personal code to avoid criticizing unless I can offer a solution. I have no solution to Nielsen’s sample crisis. But I have a warning to broadcasters: This must stop before our advertisers wake up and realize what their ad rates have been based on.

This is absurd. Something must be done.

Conclusion

It sounds ridiculous, doesn’t it? It is.

The radio ratings process is broken. And it may be too late to fix.

Yet, as long as we allow the existing system to remain in place, and fund the companies producing the ratings “estimates” by paying hundreds of thousands of dollars a year, it’s the game we play.

At least until advertisers figure out what’s going on here and stop placing media buys based on it.
